AI, ChatGPT and its implications for life and work

Alan Turing (1912-1954), a British mathematician, is considered by some to be the father of theoretical computer science and artificial intelligence (AI).

Chances are the late genius would be fascinated by recent advances in AI, particularly OpenAI's ChatGPT, a new AI technology that can interpret human language and generate detailed, human-sounding content.

Indeed, the existence of ChatGPT and a brand-new rival platform, Google's Bard, may remind classic-movie aficionados of HAL 9000, the thinking computer from Stanley Kubrick's 1968 science fiction film, "2001: A Space Odyssey".

An article by Julianne Buchler on www.Medium.com suggested ChatGPT and Bard may meet the so-called "Turing test," which posits the following idea: If a technology creates an output and people have to ask whether a machine or a human created it, that technology must be considered to be intelligent.

ChatGPT, the website explains, can answer follow-up questions by a user, admit its own mistakes, challenge incorrect premises and reject inappropriate requests.

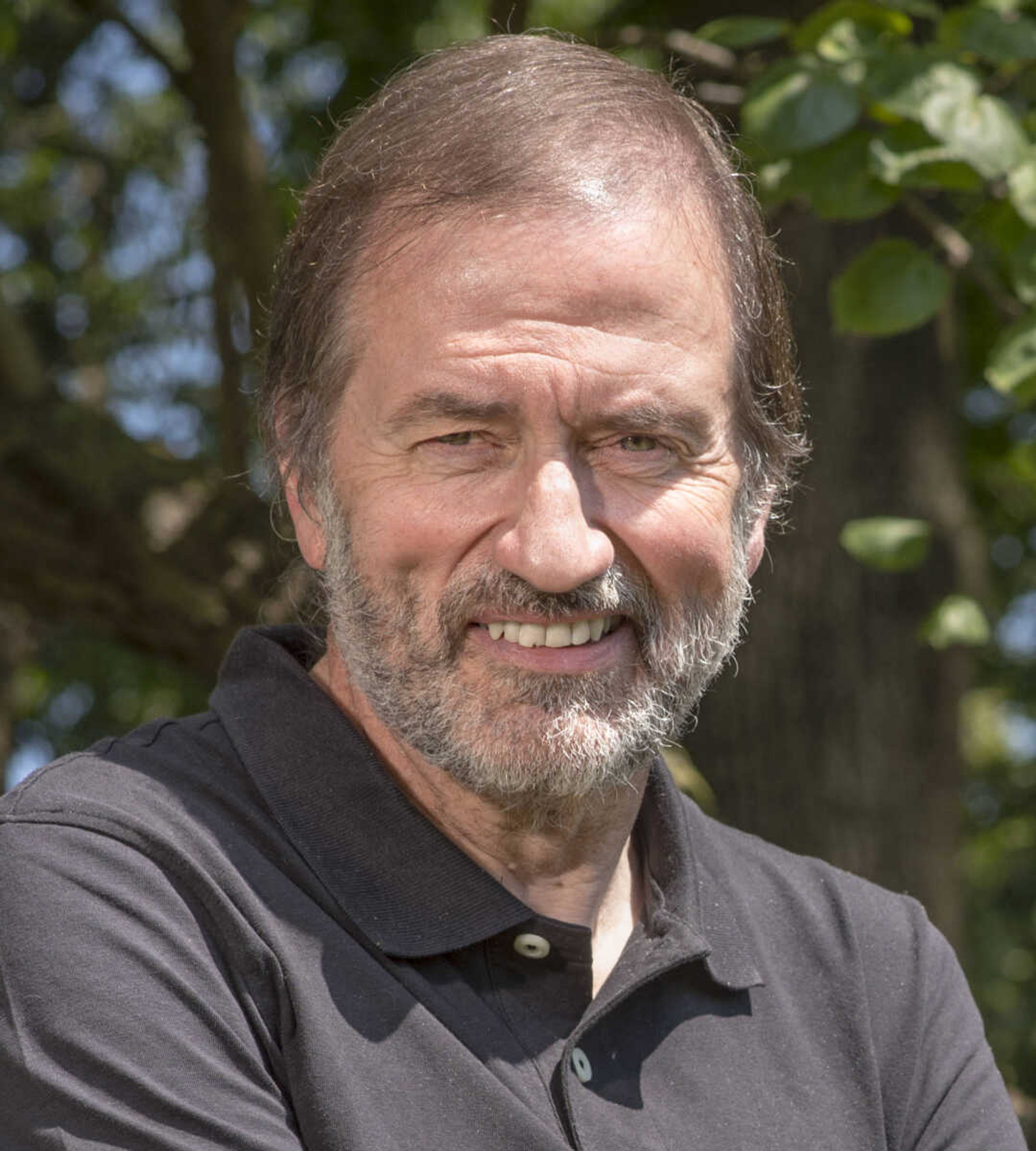

The Southeast Missourian put six questions to Bob Lowe, assistant professor of computer science at Southeast Missouri State University, who has been on the SEMO faculty since 2020.

A Knoxville, Tennessee, native, Lowe has worked in natural language processing and neural networking, and has done much reading about how the GPT-3 model was built and what it can do.

What does GPT-3 mean?

It's an acronym standing for Generative Pretrained Transformer 3, meaning it's the third version of the tool to be released. In fact, OpenAI released the newest version, GPT-4, just last week. Basically, it's a computerized tool that creates, or generates, text using algorithms that are pretrained. Pretrained means the computer has already been fed all the data needed to carry out its task.

What is your specialized knowledge about AI?

In my research, I've had a concentration on plagiarism detection and authorship attribution. The Transformer models started to catch on in 2017. The basic ideas about how they work have been around since the late 1970s.

All the tech giants have gotten involved. Meta has a generative model. Google does, too, and I've heard rumors about Apple. The idea behind GPT is you give the computer program something which is converted into a string of tokens, which are essentially word numbers. If you have a vocabulary of 100,000 words, each word has a number. A user enters a prompt, a vector entry, so to speak, and that is used to create a probable next step. You take a part of a phrase which generates a response and the program then takes that response and generates more language. The computer has read a lot of text.
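The loop Lowe describes (turn words into numbers, pick a probable next token, then feed the result back in to generate more) can be sketched in a few lines of Python. This is a toy illustration only: it uses simple word-pair counts in place of the Transformer neural network that GPT models actually rely on, and the corpus, vocabulary and function names are all invented for the example.

```python
from collections import Counter, defaultdict

# Tiny stand-in for "the computer has read a lot of text" (hypothetical corpus).
CORPUS = "the cat sat on the mat . the cat ate the fish ."

def build_vocab(text):
    """Assign each distinct word an integer ID, in order of first appearance."""
    vocab = {}
    for word in text.split():
        vocab.setdefault(word, len(vocab))
    return vocab

def encode(text, vocab):
    """Convert a string into a list of token IDs (the "word numbers")."""
    return [vocab[word] for word in text.split()]

def train_bigram_counts(token_ids):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(token_ids, token_ids[1:]):
        counts[current][following] += 1
    return counts

def generate(prompt, vocab, counts, max_new_tokens=5):
    """Repeatedly append the most frequent follower of the last token."""
    id_to_word = {i: w for w, i in vocab.items()}
    tokens = encode(prompt, vocab)
    for _ in range(max_new_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break  # nothing ever followed this token in the corpus
        # Greedy choice: highest count wins; ties go to the lowest token ID.
        next_id = min(followers, key=lambda t: (-followers[t], t))
        tokens.append(next_id)
    return " ".join(id_to_word[t] for t in tokens)

vocab = build_vocab(CORPUS)
counts = train_bigram_counts(encode(CORPUS, vocab))

print(encode("the cat", vocab))                               # [0, 1]
print(generate("the cat", vocab, counts, max_new_tokens=3))   # the cat sat on the
```

Real systems differ in important ways: they split text into sub-word pieces rather than whole words, look at the entire prompt rather than just the last token, and usually sample from the predicted probabilities instead of always taking the top choice.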

What is the upside of this technology?

You can use generative AI, regardless of the proprietary software being used, to summarize and query large blocks of text. You're querying a database of millions and millions of documents. What you get back from what you ask the computer is almost like a summary. It can also be used to generate an article.

What are the potential abuses of this application of AI?

Well, plagiarism for one. I can make ChatGPT do coding homework like what we give in our more basic classes here at SEMO. You could literally take one of our (computer) programming problems, enter it into ChatGPT and it will write a program for you — which is cheating. Of course, then, the student doesn't learn anything. When ChatGPT gets it wrong, it gets it really wrong but confidently wrong.

Do you see a danger to academic integrity with this technology?

Yes and no. What you get back sounds plausible with impeccable grammar, and it can write a paper for a student with citations. But the computer doesn't have any actual understanding. It's usually blatantly obvious but that's what we have now. The tech could get better down the line. I think, for the moment, if you generated a paper with ChatGPT, you might get a low C grade or a high D. If I were going to cheat today, I'd probably still do it the old-fashioned way and buy an essay from someone.

Have you had a student turn in a paper clearly processed using generative AI?

Not yet, but I have been looking for it. It might be harder to spot an AI-generated paper on computer code. It's going to get harder, though, for faculty in whatever field as the technology improves. There are some tools that can spot, within a certain probability, whether a text was created by a generative model rather than a human being. When someone writes something, there's a specific 'voice' to it, and ChatGPT is no exception because it also has its own voice. Chatbots, as time goes on, will make it harder for faculty to determine if students actually wrote the words they submit.
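The idea that every writer has a measurable "voice" can be made concrete with a crude stylometric comparison. The Python sketch below is not how any particular AI-detection tool works; it is a simplified illustration, assuming a small hand-picked list of function words and hypothetical helper names, of how two texts can be scored for similarity of style rather than similarity of topic.

```python
import math
import re
from collections import Counter

# A short, hand-picked list of common function words (an assumption for this sketch).
FUNCTION_WORDS = ["the", "of", "and", "to", "a", "in", "that", "it", "is", "but"]

def profile(text):
    """Return the relative frequency of each function word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = len(words) or 1  # avoid dividing by zero on empty input
    return [counts[w] / total for w in FUNCTION_WORDS]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # no function words found in at least one text
    return dot / (norm_a * norm_b)

known_sample = "It is the voice of the writer that matters, but it is hard to pin down."
submitted = "The essay is clear and the argument is sound, but it reads a little flat."

score = cosine_similarity(profile(known_sample), profile(submitted))
print(f"style similarity: {score:.2f}")
```

A score near 1.0 suggests similar function-word habits, but short samples like these are far too small to support any conclusion; practical attribution work uses much longer texts and many more features.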

Of note

Signing up for limited access to ChatGPT is free at https://chat.openai.com.

Concerns

Buchler's article on www.Medium.com has identified three potential problems presented by the ready availability of generative AI.

- Fake news creation and distribution: Users could provide ChatGPT with the skeleton of a fake news story and ask it to write 100 versions and then distribute them via 100 Twitter or other social media accounts. Social media platforms are going to need to get smart about AI technology and how to address fake content.

- AI-generated school assignments: At this point, ChatGPT can't achieve the quality of a university-level paper, but it could easily do something credible for the grade school levels. The text might come out sounding a bit unnatural, but it would be easy for a student to modify it to sound human. Educators will have to evaluate the implications for assessment going forward.

- Cannibalization of jobs: There is the "dystopian" concern that this is already happening in the art world and in professions such as journalism and computer programming. It is unknown what this will look like going forward and whether and what new opportunities or jobs will be created.
